Breaking down Spider searches

I'm a new user of WebLog Expert and so far I'm loving all the information it gives. Great product.

A couple of quick questions though. I'm analyzing a group of logs and have a filter that's set up to grab just the Googlebot spider.

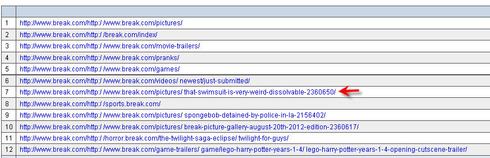

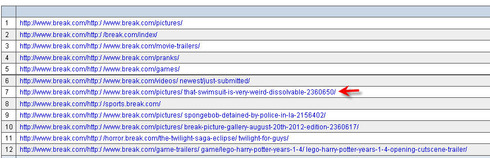

1. When I look at the results, I'm getting http://www.mydomain.com/http:/ /www.mydomain.com/pictures/that-swimsu...

Is it supposed to list the domain in that format? It almost looks like it's adding information: instead of just showing http://www.mydomain.com/pictures//tha... it adds http://www.mydomain.com to the start of it?

2. Is it possible to create a report that takes all the Googlebot information, picks out just the URLs I want, and displays them?

Example:

Let's say I have 20 URLs that all look something like

www.mydomain.com/pictures/a

www.mydomain.com/pictures/b

www.mydomain.com/pictures/c

www.mydomain.com/pictures/d

and so on.

Instead of adding them up manually, can I just tell WebLog Expert to run a report that says Googlebot hit the www.mydomain.com/pictures/ directory and adds up all the hits, instead of showing me every single URL?

Hope that makes sense.

When you create the table, choose "Directory" as main data. Click the "Custom..." button to the right of it and enter the following value:

http://www.break.com/(.*?)/ ~= \1/

This value should remove the extra "http://www.break.com/" part in this report and will also report aggregated statistics on top-level directories only.

You also need to create an include "Spider" filter with the value * in the table properties, so the table will include statistics on all spider requests for files inside the reported directories.
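To make the effect of the rule easier to picture, here is a rough Python sketch of the same idea. It is only an illustration, not how WebLog Expert processes the rule internally: it assumes the rule replaces the whole directory value with the first captured path segment plus a slash. The names `RULE`, `top_level_directory`, `hits_per_directory` and the sample values are made up for the example.

```python
import re
from collections import Counter

# Hypothetical Python equivalent of the pattern side of the rule
# "http://www.break.com/(.*?)/ ~= \1/" (dots escaped for Python).
RULE = re.compile(r"http://www\.break\.com/(.*?)/")


def top_level_directory(value):
    """Return the first path segment plus '/', or the value unchanged."""
    match = RULE.match(value)
    if match is None:
        return value  # rule does not apply; leave the value as it is
    return match.group(1) + "/"


def hits_per_directory(values):
    """Count one hit per value, grouped by top-level directory."""
    return Counter(top_level_directory(v) for v in values)


# Made-up directory values, one per spider request.
sample = [
    "http://www.break.com/pictures/a/",
    "http://www.break.com/pictures/b/",
    "http://www.break.com/videos/c/",
]

for directory, hits in hits_per_directory(sample).items():
    print(directory, hits)
```

With these sample values, the two requests under "pictures/" are counted together on one row instead of being listed as separate URLs.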

If the rule doesn't work, could you send a sample log file from your site to us at support@weblogexpert.com? You can also find information on how to create the rules at http://www.weblogexpert.com/help/wlex...